One of the biggest problems in AI and machine learning is that models need a lot of data to learn a task well. Most AI systems need large amounts of labeled data, whether they're used for language processing, image recognition, or predictive analytics.

But gathering and organizing such large datasets costs a lot of money and takes a lot of time. This is where transfer learning really shines, making it possible for AI to learn faster with far less data. This post goes into more detail about the idea of transfer learning and looks at how it helps AI systems perform better with less data, which speeds up the training process.

What Is Transfer Learning?

Transfer learning is a technique in which a machine learning model is trained on one task and then adapted to solve a different but related task. It uses what the model learned on the first task to make the next task easier and faster to learn. This way, you don't have to start from scratch every time you want to train a new model.

Think of it like a student who has already learned the basics of mathematics. When asked to learn a new subject, like physics, they don’t have to start from zero because the mathematical skills they’ve acquired can be applied to solve problems in physics. Similarly, AI models trained using transfer learning can “transfer” the knowledge they’ve gained from one task to another.

Why Transfer Learning Helps AI Learn Faster

Deep learning models trained from scratch typically need very large datasets to train effectively. For example, training a model from scratch to recognize cats and dogs in images can require many thousands, or even millions, of labeled pictures to achieve good accuracy. But what if you don’t have that many images? Or what if gathering all that data is impractical? That’s where transfer learning comes in. Here's how it helps:

Reduces the Need for Large Datasets

One of the biggest benefits of transfer learning is that it significantly reduces the need for large datasets. Since the AI model has already been pre-trained on a large, diverse dataset (like ImageNet), it can leverage that existing knowledge when applied to a new task. Instead of starting from scratch, the model uses what it has learned to make sense of new, smaller datasets. This means less data is needed for the model to perform effectively on a new task.

For instance, a model trained on a broad set of images (including animals, cars, etc.) might only need a few hundred labeled images of a specific animal to identify it with high accuracy.

Speeds Up Training

Training a deep learning model from scratch can take days or even weeks, depending on the complexity of the task and the amount of data. Transfer learning speeds up the training process by letting the model start from features that a previous model has already learned. Training is quicker because the model only needs to learn the features that are specific to the new task.

For example, in a medical imaging scenario, a model trained to recognize a variety of general patterns in images (like textures, shapes, and edges) can transfer that knowledge to help with recognizing specific diseases in medical scans. The model won’t have to learn all the low-level features from scratch, which accelerates the training process.
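One way to see why reusing learned features saves training effort is to count how many parameters still need updating. The sketch below assumes a common variant of transfer learning in which the pre-trained layers are frozen and only a new output layer is trained. The layer sizes and the names `backbone_layers` and `head_layer` are hypothetical and chosen only for the arithmetic.

```python
# Hypothetical layer sizes for a small fully connected classifier.
# Each pair is (inputs, outputs) of one dense layer; none of these
# numbers come from a real model.
backbone_layers = [(784, 512), (512, 256), (256, 128)]  # pre-trained, reusable features
head_layer = (128, 10)                                   # new task-specific output layer


def dense_params(n_in, n_out):
    """Weights plus biases of one fully connected layer."""
    return n_in * n_out + n_out


backbone_params = sum(dense_params(i, o) for i, o in backbone_layers)
head_params = dense_params(*head_layer)
total_params = backbone_params + head_params

# From scratch, every parameter is updated; with a frozen backbone, only the head is.
trainable_fraction = head_params / total_params

print(f"from scratch:    {total_params} trainable parameters")
print(f"frozen backbone: {head_params} trainable parameters "
      f"({trainable_fraction:.2%} of the total)")
```

With these made-up sizes, 1,290 of 567,434 parameters (about 0.23%) are updated when the backbone is frozen. The forward pass through the frozen layers still has to be computed, so this is only a rough indication of the savings, not a measurement of training time.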

How Transfer Learning Works

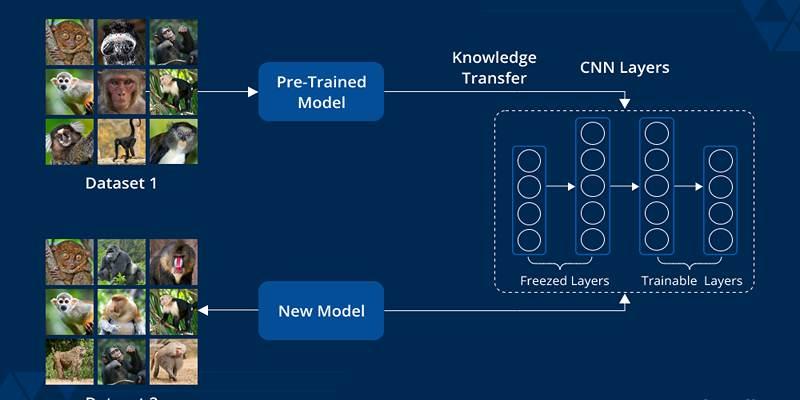

To understand how transfer learning works, let’s break it down into the following steps:

- Pretraining: First, a model is trained on a large dataset for a general task, such as image classification, using millions of labeled images. During this process, the model learns to identify patterns and features that are applicable across many types of images (e.g., edges, shapes, and textures).

- Fine-tuning: Once pretraining is completed, the model is adapted to a new, smaller dataset. The model’s parameters are adjusted, or “fine-tuned,” to make it more suitable for the new task. This step requires significantly less data than training a model from scratch.

- Adaptation: The model’s layers are adjusted to focus on the features relevant to the new task, often by replacing the final output layer and retraining it, sometimes together with a few of the later layers. For instance, if the original model was trained to recognize general objects like dogs and cats, it may be fine-tuned to identify specific breeds of dogs using a smaller, specialized dataset.
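The steps above can be sketched with a deliberately tiny model in plain Python. This is an illustration under stated assumptions, not how production systems are built: both tasks, the datasets, and the helper names (`pretrain`, `feature`, `fine_tune_head`) are made up for this example, and a two-weight logistic regression stands in for a deep network.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def mean_log_loss(scores, labels):
    """Mean logistic loss computed from raw scores (stable softplus form)."""
    total = 0.0
    for z, y in zip(scores, labels):
        t = -z if y == 1 else z
        total += max(t, 0.0) + math.log1p(math.exp(-abs(t)))
    return total / len(labels)


# Step 1, pretraining: learn weights on a larger "general" task A.
# Task A (made up for illustration): is x0 + x1 greater than 0?
grid = [i / 4 for i in range(-4, 5)]  # 9 values in [-1, 1]
task_a = [((x0, x1), int(x0 + x1 > 0)) for x0 in grid for x1 in grid]  # 81 examples


def pretrain(data, lr=0.5, steps=300):
    """Plain gradient descent on logistic regression; returns the learned weights."""
    w0 = w1 = bias = 0.0
    n = len(data)
    for _ in range(steps):
        g0 = g1 = gb = 0.0
        for (x0, x1), y in data:
            err = sigmoid(w0 * x0 + w1 * x1 + bias) - y
            g0 += err * x0
            g1 += err * x1
            gb += err
        w0 -= lr * g0 / n
        w1 -= lr * g1 / n
        bias -= lr * gb / n
    return (w0, w1)


backbone = pretrain(task_a)  # kept frozen from here on


def feature(x, w):
    """Frozen feature extractor: the pretrained direction, scaled into [-1, 1]."""
    return (w[0] * x[0] + w[1] * x[1]) / (abs(w[0]) + abs(w[1]))


# Steps 2 and 3, fine-tuning and adaptation: train only a small new head on task B.
# Task B (also made up): is x0 + x1 greater than 0.5? Only 8 labeled examples.
task_b = [((-1.0, -1.0), 0), ((-0.5, 0.5), 0), ((0.25, 0.0), 0), ((0.5, -0.25), 0),
          ((0.5, 0.25), 1), ((0.0, 0.75), 1), ((1.0, 1.0), 1), ((0.75, 0.5), 1)]


def fine_tune_head(data, w, lr=1.0, steps=500):
    """Fit scale a and offset b on top of the frozen feature; w is never updated."""
    feats = [feature(x, w) for x, _ in data]
    a = b = 0.0
    for _ in range(steps):
        ga = gb = 0.0
        for f, (_, y) in zip(feats, data):
            err = sigmoid(a * f + b) - y
            ga += err * f
            gb += err
        a -= lr * ga / len(data)
        b -= lr * gb / len(data)
    return a, b


labels_b = [y for _, y in task_b]
feats_b = [feature(x, backbone) for x, _ in task_b]
loss_before = mean_log_loss([0.0 for _ in feats_b], labels_b)  # untrained head (a = b = 0)
a, b = fine_tune_head(task_b, backbone)
loss_after = mean_log_loss([a * f + b for f in feats_b], labels_b)
accuracy = sum((a * f + b > 0) == (y == 1) for f, y in zip(feats_b, labels_b)) / len(task_b)

print(f"loss on task B: {loss_before:.3f} -> {loss_after:.3f}, "
      f"training accuracy {accuracy:.2f}")
```

Only the two head parameters `a` and `b` change during fine-tuning; the pretrained weights are reused as they are. In a deep network the same idea applies to whole layers rather than to a single direction, and some of the later layers are often unfrozen as well.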

Real-World Applications of Transfer Learning

Transfer learning is already widely used in various fields. Here are a few examples:

Computer Vision and Image Recognition

Transfer learning has revolutionized the way AI handles image recognition. Models like ResNet, VGG, and Inception are pre-trained on large datasets like ImageNet, and they can be fine-tuned to detect specific objects, faces, or even medical conditions in images, all with limited data.

Natural Language Processing (NLP)

In NLP, models like BERT and GPT have been pre-trained on vast amounts of text data. They can be fine-tuned to perform various tasks, such as sentiment analysis, language translation, or chatbot functionality. Once pre-trained, these models need far less data to adapt to new languages or specialized tasks than they would if trained from scratch.

Healthcare and Medical Imaging

In healthcare, AI models trained on general medical image datasets can be adapted to detect specific conditions like tumors, organ abnormalities, or other diseases. This allows healthcare providers to implement AI-driven tools with reduced data requirements.

Conclusion

Transfer learning is a powerful technique that helps AI systems learn faster, more efficiently, and with less data. By leveraging knowledge from pre-trained models, AI can adapt to new tasks quickly and with minimal data, making it an essential tool in the development of AI systems. Whether it’s in image recognition, language processing, or medical diagnosis, transfer learning is accelerating the progress of AI, enabling it to tackle complex challenges with less effort and fewer resources.